At LEAP, we work with many customers at the forefront of computational particle modelling (discrete element modelling, DEM), with engineers and analysts from industries as diverse as minerals and mining, agriculture and pharmaceuticals. We have seen steady demand not only for faster solution times but also for higher-fidelity simulations. Customers want to include more particles in their DEM simulations (to represent particle size distributions, PSDs, more accurately) and to use more complex particle shapes (with more facets, to reflect the true shapes of particles such as rocks and tablets).

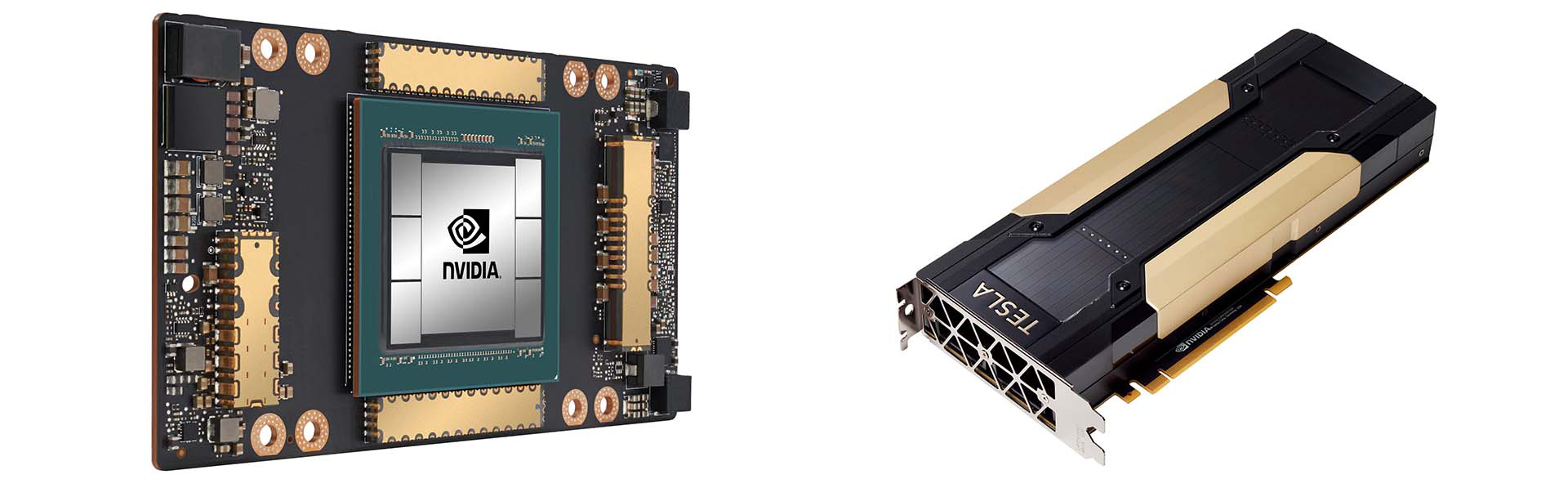

Fortunately, we are living through a period in which computational hardware is evolving at a rapid pace: over the past 5 years or so, GPU computational capability has increased by several orders of magnitude. We have also been fortunate to work with ESSS, the developers of Rocky DEM, which was the first commercial DEM code to offer a dedicated GPU-based solver and was later extended to support multiple GPUs. Developers at ESSS have observed the same level of interest from Rocky DEM customers worldwide and recently reviewed this topic in detail, completing a comprehensive set of benchmarks that compared solve times on an 8-core CPU against single and multiple GPUs. You can see the results summarised here:

In the past, DEM simulations were restricted to relatively small problems that used, for example, only thousands of larger particles that were mostly spherical in shape.

Continual improvements in both DEM codes and computational power have enabled closer-to-reality particle simulations. Users today can expect to simulate problems using the real particle shape and the actual particle size distribution (PSD), creating DEM simulations with many millions of particles.

However, these enhancements in simulation accuracy have come at the cost of increased computational load, in both processing time and memory requirements. Within Rocky DEM, this load can be offset considerably by GPU processing, which lets users obtain results in a more practical time frame.

The benefits of GPU

The addition of GPU processing has helped make DEM a practical tool for engineering design. For example, the speed-up experienced by processing a simulation with even an inexpensive gaming GPU is remarkable when compared to a standard 8-core CPU machine working alone.

Since release 4 of Rocky, users have been able to run on multiple GPUs, which makes it possible to tackle large-scale and/or complicated problems that were previously out of reach due to memory limitations. Combining the memory of multiple GPU cards overcomes these limitations, and aggregating their computing power delivers a substantial performance increase.

From an investment perspective, there are many benefits to multi-GPU processing. The hardware cost of running cases with several million particles on multiple GPUs is much lower than that of an equivalent CPU-based machine. GPUs also consume less energy, and GPU-based machines are easier to upgrade, either by adding more cards or by buying newer ones.

Moreover, in a world where we push multi-physics simulations ever further, Rocky GPU and multi-GPU processing enable you to free up all your CPUs for coupled simulations, avoiding competition for hardware.

Performance benchmark

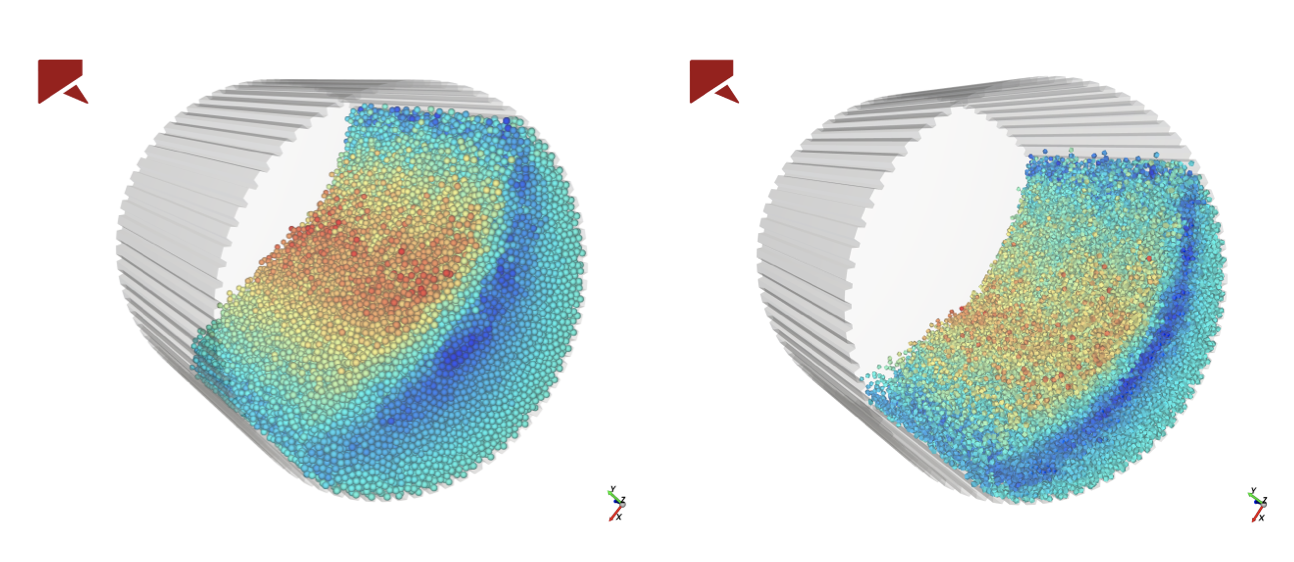

To better illustrate the gains in processing speed that are possible for common applications, a performance benchmark of a rotating drum (Figure 1) was developed. Multiple runs using different criteria were evaluated as explained below.

Figure 1 – Rotating drum benchmark case for spheres (left) and polyhedrons (right).

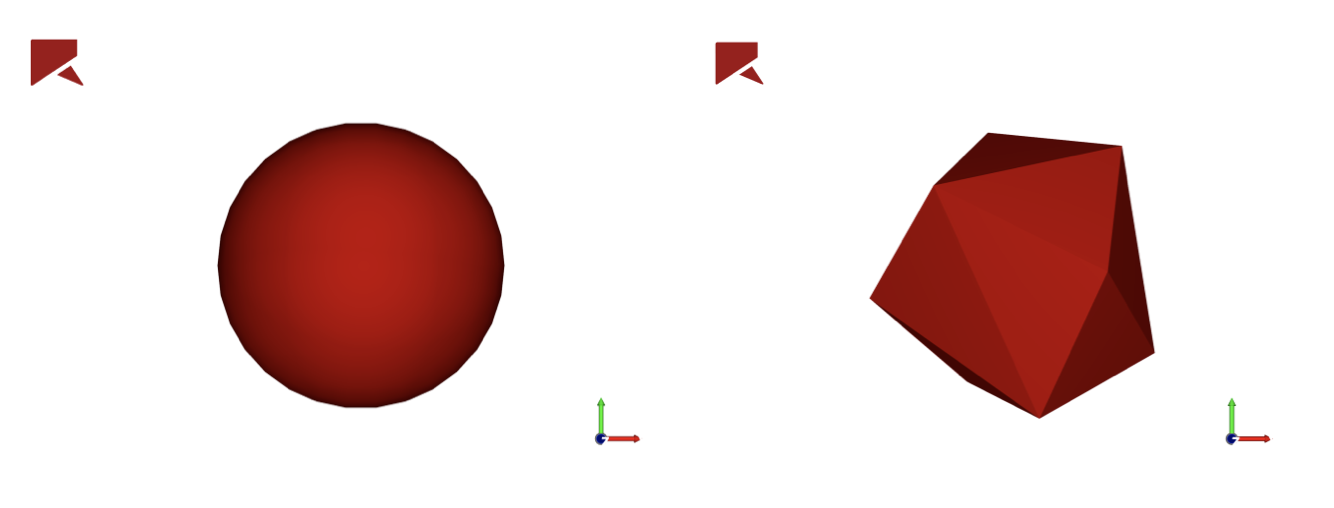

Criterion 1: Particle shape

Two different particle shapes were evaluated at the same equivalent size (Figure 2):

- Spheres

- Polyhedrons (shaped from 16 triangles)

Drum geometry was lengthened as the number of particles increased to keep the material cross section consistent across the various runs.

Figure 2 – Sphere (left) and 16-triangle polyhedron (right) particle shapes used in the benchmark case.

Criterion 2: Processing type

Four different processing combinations were evaluated:

- CPU: Intel Xeon Gold 6230 @ 2.10GHz on 8 cores

- 1 GPU: NVIDIA V100

- 2 GPUs: NVIDIA V100

- 4 GPUs: NVIDIA V100

Criterion 3: Performance measurement

Two measurements were taken at steady state to evaluate performance:

- Simulation Pace, which is the amount of hardware processing time (duration) required to advance the simulation one second. In general, a lower simulation pace indicates faster processing.

- GPU Memory Usage, which is the amount of memory being used on the GPU while processing the simulation. In general, a lower memory usage allows for more particles to be processed, and/or more calculations to be performed.
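As a rough illustration of the Simulation Pace definition above, the sketch below computes a pace from wall-clock time and simulated time, then derives a speed-up as the ratio of two paces. The function names and the numbers are assumptions made for this example; they are not part of Rocky DEM, and the values are not benchmark results.

```python
def simulation_pace(wall_clock_seconds: float, simulated_seconds: float) -> float:
    """Hardware processing time needed to advance the simulation by one second."""
    if simulated_seconds <= 0:
        raise ValueError("simulated_seconds must be positive")
    return wall_clock_seconds / simulated_seconds


def speed_up(reference_pace: float, pace: float) -> float:
    """How many times faster `pace` is than `reference_pace` (lower pace is faster)."""
    if pace <= 0:
        raise ValueError("pace must be positive")
    return reference_pace / pace


# Made-up timings for illustration only (not measured values).
cpu_pace = simulation_pace(wall_clock_seconds=7200.0, simulated_seconds=2.0)
gpu_pace = simulation_pace(wall_clock_seconds=360.0, simulated_seconds=2.0)

print(f"CPU pace: {cpu_pace:.0f} s per simulated second")
print(f"GPU pace: {gpu_pace:.0f} s per simulated second")
print(f"Speed-up: {speed_up(cpu_pace, gpu_pace):.0f}x")
```

With these invented timings, the CPU needs 3600 s and the GPU 180 s per simulated second, which corresponds to a 20x speed-up.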

Check out this infographic to see how the GPU and multi-GPU processing capabilities available in Rocky DEM can help you speed up your particle simulations regardless of the size of your business.

Benchmark results for Rocky 4.5

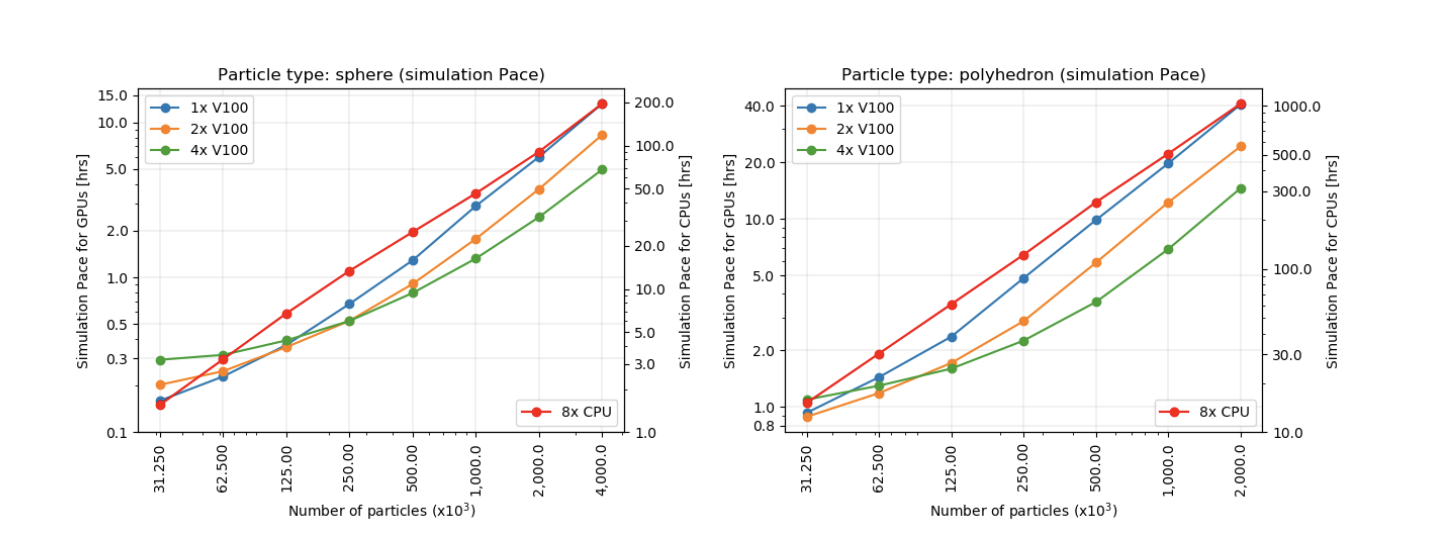

Figure 3 shows the Rocky 4.5 simulation pace for both particle shapes. Note that the CPU cases are plotted on a secondary axis because their values are much higher than those of the GPU cases. The CPU pace follows a linear trend in all cases, whereas the GPU pace goes through a transition before it reaches a linear region. It is important to be aware of this region so that you select the best GPU resources for each case.

Figure 3 – Simulation Pace obtained using spheres (left) and polyhedrons (right).

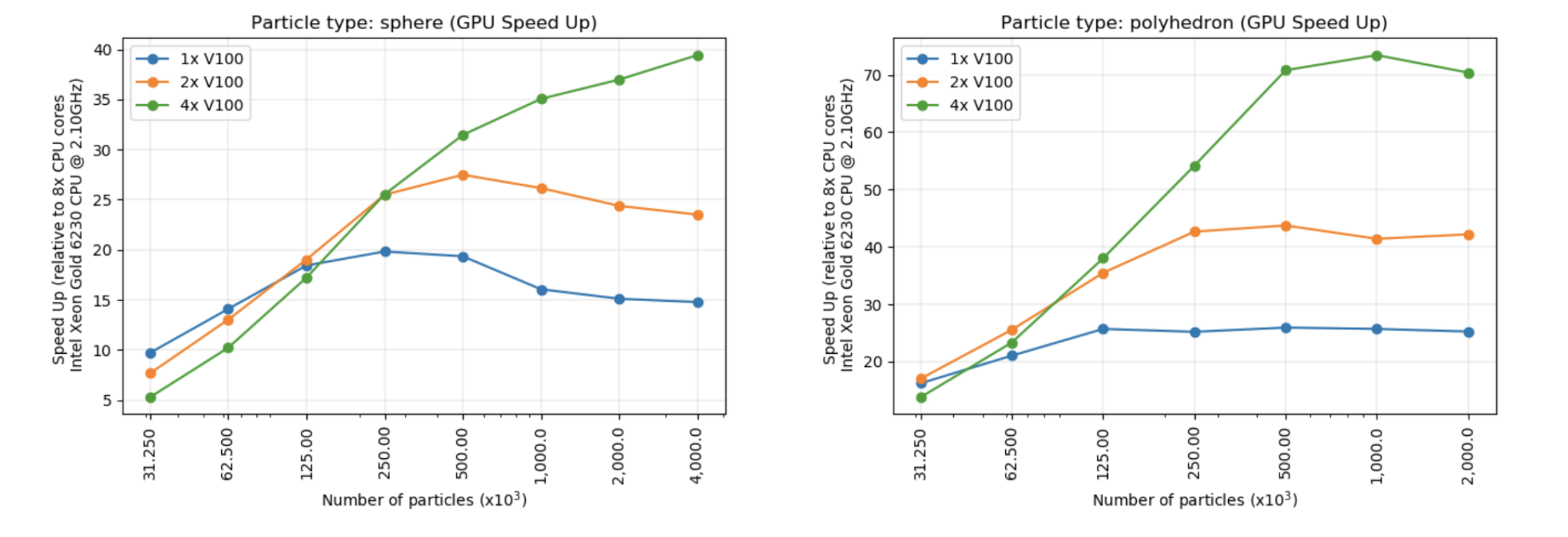

Figure 4 – GPU speed up based upon Simulation Pace (compared with CPU – 8 cores) achieved using spheres (left) and polyhedrons (right).

Relevant conclusions on simulation performance (Figure 4):

- Results show a significant performance gain with multi-GPU versus CPU simulations: up to 70 times faster for polyhedrons and 40 times faster for spheres when comparing 4 GPUs with an 8-core CPU.

- GPU maximum gain is achieved when using approximately 250K or more particles per GPU for both sphere and polyhedron shape types.

- Scalability is preserved when the number of particles is increased.
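One way to read the 250K-particles-per-GPU guideline is as a cap on how many GPUs a case can keep fully loaded. The sketch below applies it under the assumption that particles are split evenly across cards. The function name and the even-split assumption are ours, not something the benchmark or Rocky DEM specifies.

```python
# Approximate threshold reported in the benchmark conclusions above.
PARTICLES_PER_GPU_FOR_MAX_GAIN = 250_000


def gpus_at_full_gain(n_particles: int, available_gpus: int,
                      threshold: int = PARTICLES_PER_GPU_FOR_MAX_GAIN) -> int:
    """Largest GPU count (at least 1) that keeps every GPU at or above the threshold.

    Assumes an even split of particles across GPUs (an assumption of this sketch).
    """
    if n_particles < 0:
        raise ValueError("n_particles must be non-negative")
    if available_gpus < 1:
        raise ValueError("available_gpus must be at least 1")
    return max(1, min(available_gpus, n_particles // threshold))


for n in (100_000, 600_000, 1_000_000, 8_000_000):
    print(f"{n:>9,} particles -> {gpus_at_full_gain(n, available_gpus=4)} GPU(s)")
```

Under this reading, a 600,000-particle case would fully load two GPUs, while a case with 1 million or more particles could use all four cards of the benchmark machine.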

The following plots show performance improvement for spheres and polyhedrons for different numbers of particles using different numbers of GPUs (1x, 2x, and 4x).

For speed tests and hardware recommendations, see Rocky with Multi-GPU: Which Hardware is Best for You?

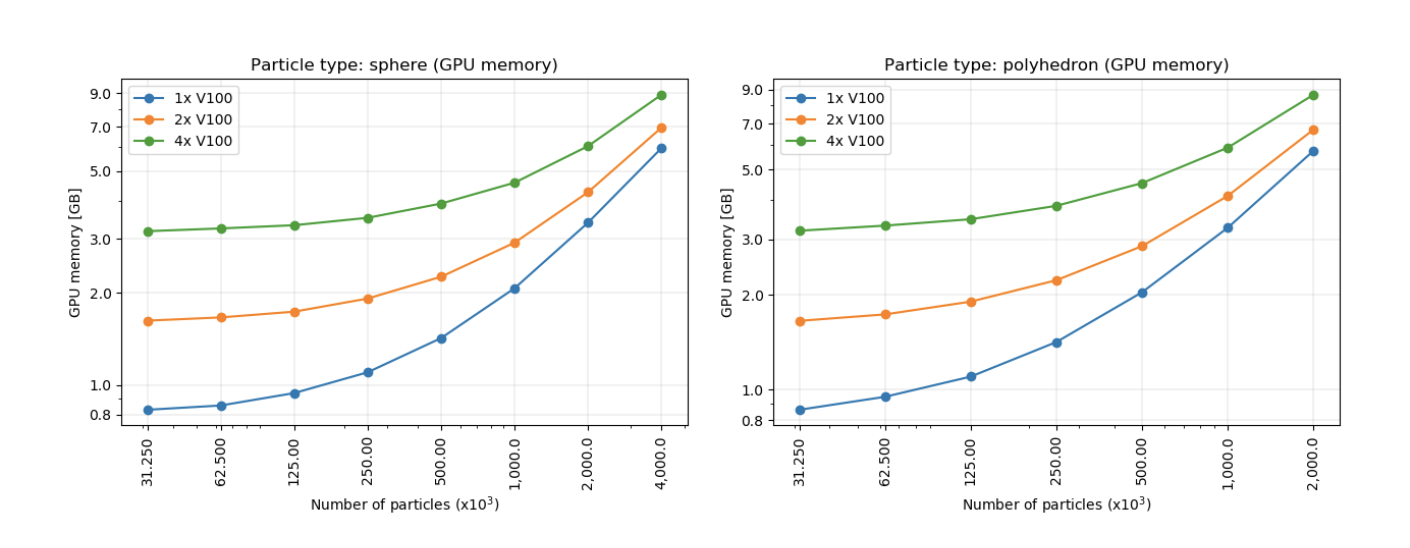

Figure 5 – GPU memory consumption using spheres (left) and polyhedrons (right).

Relevant conclusions on GPU memory consumption (Figure 5):

- Memory consumption per million particles is approximately 1GB for spheres and 2GB for polyhedrons.

Note: This ratio is just a general guideline and can vary with case behavior, setup, and enabled models.

- There is an initial memory consumption of about 800MB per GPU for general kernel allocation and simulation management. This is on top of the aforementioned particle memory allocation.
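The memory guidelines above can be combined into a back-of-the-envelope estimate per GPU. The sketch below is only that: it assumes particles are split evenly across GPUs, treats 800MB as 0.8GB, and uses the polyhedron figure measured for the 16-triangle shape in this benchmark. The function and constant names are illustrative, and actual usage will vary with case behavior, setup, and enabled models.

```python
# Approximate guideline values from the benchmark conclusions above.
GB_PER_MILLION_PARTICLES = {"sphere": 1.0, "polyhedron": 2.0}
BASE_GB_PER_GPU = 0.8  # about 800MB of fixed overhead per GPU


def estimated_gb_per_gpu(n_particles: int, shape: str, n_gpus: int = 1) -> float:
    """Rough per-GPU memory estimate, assuming an even particle split (assumption)."""
    if n_gpus < 1:
        raise ValueError("n_gpus must be at least 1")
    if shape not in GB_PER_MILLION_PARTICLES:
        raise ValueError(f"unknown shape: {shape!r}")
    millions_per_gpu = n_particles / n_gpus / 1e6
    return BASE_GB_PER_GPU + millions_per_gpu * GB_PER_MILLION_PARTICLES[shape]


# Example: 4 million polyhedrons on 1, 2 and 4 GPUs.
for gpus in (1, 2, 4):
    estimate = estimated_gb_per_gpu(4_000_000, "polyhedron", n_gpus=gpus)
    print(f"{gpus} GPU(s): about {estimate:.1f} GB per GPU")
```

Comparing the estimate against the memory of the card you plan to use gives a quick first check on whether a case is likely to fit, before running it.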

See also: GPU Buying Guide and FAQs

Rocky 4.5 compared to Rocky 4.4

When these benchmark results were compared with the results obtained in the previous release of Rocky, some important conclusions emerged:

- For 1 million+ particles, the new 4.5 release achieved an impressive pace reduction of around 50% for spheres and 20% for polyhedrons.

- The new 4.5 release reduced memory consumption by approximately 30% overall.
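To put these percentages in terms of speed, a pace reduction can be converted into an equivalent speed-up. The sketch below assumes that "pace reduction of r" means the new pace is (1 - r) times the old one, which is our reading of the figures above. The function names and the 10 GB starting value are hypothetical.

```python
def speed_up_from_pace_reduction(reduction: float) -> float:
    """Equivalent speed-up if the new pace is (1 - reduction) times the old pace."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


def memory_after_reduction(previous_gb: float, reduction: float) -> float:
    """Memory use after applying a fractional reduction to a previous value."""
    return previous_gb * (1.0 - reduction)


for shape, reduction in (("spheres", 0.50), ("polyhedrons", 0.20)):
    factor = speed_up_from_pace_reduction(reduction)
    print(f"{shape}: about {factor:.2f}x faster")

# Hypothetical case that used 10 GB in the previous release.
print(f"memory: about {memory_after_reduction(10.0, 0.30):.1f} GB")
```

Under this reading, a 50% pace reduction corresponds to roughly a 2x speed-up, and a 20% reduction to roughly 1.25x.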